For years, SEO professionals have warned against duplicate content.

Phrases like “Just don’t do it,” “Make sure every page’s content is unique,” and “You don’t want to be penalized for duplicate content” have been heard ’round the world.

What Is Duplicate Content?

According to Moz, “Duplicate content is content that appears on the Internet in more than one place (URL).” In fact, that quoted sentence is technically duplicate content; however, I have credited the source, so we should be OK.

Here’s the thing about duplicate content: sometimes it’s unavoidable. For instance, a website may well have several copies of its terms and conditions page, and even a printer-friendly version of a page could technically be seen as duplicate content. And what about news sites and websites dedicated to publishing other people’s content? All of that could be called duplicate content, too.

So what should websites do about that content? Let’s watch a recent video from Matt Cutts, head of Google’s webspam team (AKA The Google Webmaster):

Here’s what stands out most:

- Duplicate content happens all the time on the web, but not all of it is spam, and Google doesn’t treat it that way

- Duplicate content isn’t really treated as spam; it’s treated as something that needs to be considered and ranked appropriately

- If your site is nothing but spammy, manipulative duplicate content, Google reserves the right to penalize it

Moral of the story: write content for your users, not the search engines, and avoid spammy, keyword-stuffed content.

Tools to Detect Duplicate Content

If you’re wondering how to detect duplicate content, here are a few resources for you:

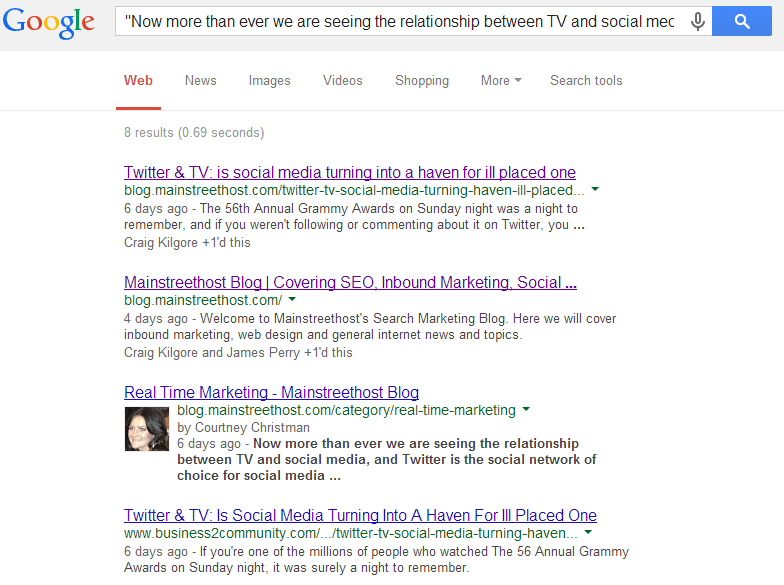

One of the easiest ways to detect duplicate content is to paste a snippet of your content, wrapped in quotation marks, into Google’s search box. If the results include other pages with the exact same text, you have duplicate content.
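If you run this check often, building the search URL by hand gets tedious. Below is a small Python sketch that wraps a snippet in quotes and builds the Google search URL for it. The function name exact_match_search_url is hypothetical. The sketch only constructs the link for you to open in a browser; it does not fetch or parse any results.

```python
from urllib.parse import urlencode


def exact_match_search_url(snippet: str) -> str:
    """Build a Google search URL for an exact-phrase (quoted) query."""
    # Collapse runs of whitespace so line breaks copied from the page
    # don't end up inside the quoted phrase.
    phrase = " ".join(snippet.split())
    # Double quotes ask Google for pages containing this exact wording.
    query = f'"{phrase}"'
    # urlencode escapes the quotes (%22) and turns spaces into "+".
    return "https://www.google.com/search?" + urlencode({"q": query})


url = exact_match_search_url("Duplicate content happens all the time on the web")
print(url)
```

Keep snippets to a sentence or two. A very long quoted phrase is more likely to miss copies that were lightly edited.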

In the example below, I took a snippet of a recent blog post and pasted it into Google’s search. As you can see, the results are from the Mainstreethost blog and Business 2 Community, which republishes our posts with credit, so it isn’t the harmful kind of duplicate content.

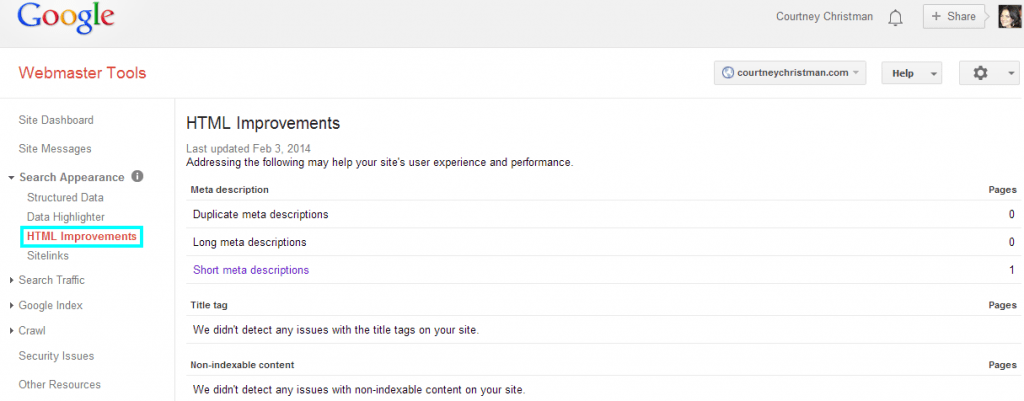

Google Webmaster Tools

If Google sees duplicate content on your website, including duplicate page titles and meta descriptions, it will flag it for you in Webmaster Tools.
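Webmaster Tools only reports on pages Google has already crawled. If you’d like to check your own pages first, the rough Python sketch below groups pages that share a title or meta description. It makes some assumptions:

- You already have each page’s HTML in a dict keyed by URL. How you fetch the pages is up to you.
- HeadReader and find_duplicate_head_tags are hypothetical names, not part of any tool.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadReader(HTMLParser):
    """Collects a page's <title> text and its meta description."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.description = ""

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.description = (attr.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def find_duplicate_head_tags(pages):
    """pages: {url: html}. Returns {(kind, text): [urls]} for repeated values only."""
    groups = defaultdict(list)
    for url, html in pages.items():
        reader = HeadReader()
        reader.feed(html)
        reader.close()
        title = " ".join(reader.title.split())  # normalize whitespace
        if title:
            groups[("title", title)].append(url)
        if reader.description:
            groups[("description", reader.description)].append(url)
    # Keep only titles/descriptions used by more than one URL.
    return {key: urls for key, urls in groups.items() if len(urls) > 1}
```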

Copyscape and PlagSpotter

PlagSpotter and Copyscape are two of many tools for detecting and monitoring duplicate content. Paste in a page URL and each will check the web for copies of that page.

Go ahead and check your website’s content…
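Copyscape and PlagSpotter search the web for you. Sometimes you only need to compare two pages you already have, such as your product page and the manufacturer’s. For that, a rough local check is to measure how many 5-word sequences (“shingles”) the two texts share, using Jaccard similarity.

This is a generic near-duplicate technique, not how either tool works internally. The 5-word shingle size is an assumption, and the function names are hypothetical.

```python
import re


def shingles(text, k=5):
    """Return the set of k-word sequences in the text (lowercased)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Short texts become a single shingle (or nothing if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a, b, k=5):
    """Share of distinct shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    union = sa | sb
    if not union:
        return 0.0  # two empty texts: treat as "no evidence of duplication"
    return len(sa & sb) / len(union)


original = "the quick brown fox jumps over the lazy dog"
edited = "the quick brown fox jumps over a sleeping cat"
print(jaccard_similarity(original, edited))  # 2 shared shingles out of 8
```

A score close to 1.0 means the pages are near-copies. Where you draw the line between “similar” and “duplicate” is a judgment call.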

What Should I Do If I Have Duplicate Content on My Website?

If you have duplicate content on your website, or even multiple copies of your website, here are a few suggestions to remedy the situation:

301 Redirect

If you have duplicate content, one way to fix it is to set up a 301 (permanent) redirect from the duplicate page to the original page. Visitors and search engine bots that request the duplicate URL are sent to the original instead. The pages stop “competing” with each other to rank, which strengthens the original page’s ranking and makes it more relevant for the search engines.
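Most sites set up 301 redirects in their web server configuration or CMS rather than in application code. To show what a 301 actually consists of, here is a minimal Python sketch using the standard library’s http.server. Requests for a known duplicate path get a 301 Moved Permanently status plus a Location header pointing at the original. The paths in REDIRECTS are made-up examples.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mapping: duplicate URL path -> original (preferred) path.
REDIRECTS = {
    "/blog/duplicate-content-print/": "/blog/duplicate-content/",
    "/index.html": "/",
}


def resolve_redirect(path):
    """Return the original path if `path` is a known duplicate, else None."""
    return REDIRECTS.get(path)


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = resolve_redirect(self.path)
        if target is not None:
            # 301 tells browsers and bots the duplicate has moved permanently.
            self.send_response(301)
            self.send_header("Location", target)
            self.end_headers()
        else:
            self.send_response(200)
            self.send_header("Content-Type", "text/plain; charset=utf-8")
            self.end_headers()
            self.wfile.write(b"Original content\n")


def serve(port=8000):
    """Run locally, then visit http://127.0.0.1:8000/index.html to see the redirect."""
    HTTPServer(("127.0.0.1", port), RedirectHandler).serve_forever()
```

The status code is the important part. A 302 (temporary) redirect signals that the move isn’t permanent, which is not what you want when consolidating duplicates.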

rel="canonical" Tag

A rel="canonical" tag has a similar effect to a 301 redirect, but it often takes much less time to implement, and visitors can still reach the duplicate page. Here is an example of a rel="canonical" tag from Moz:

```html
<link href="http://www.example.com/canonical-version-of-page/" rel="canonical" />
```

Placing this tag in the head section of the duplicate page’s HTML tells search engines to treat that page as a copy of the URL provided and to pass all of its ranking “power” on to that URL.
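To confirm that a duplicate page actually carries the tag, a short Python sketch can parse the page and list every canonical URL it declares. It uses only the standard library’s html.parser, and find_canonical is a hypothetical helper name. A page should normally declare a single canonical URL, so more than one result is worth investigating.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags like <link ... /> are routed here as well.
        if tag != "link":
            return
        attr = dict(attrs)
        # rel may hold several space-separated values; compare case-insensitively.
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonical(html):
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


page = (
    "<html><head>"
    '<link href="http://www.example.com/canonical-version-of-page/" rel="canonical" />'
    "</head><body>Printer-friendly copy</body></html>"
)
print(find_canonical(page))
```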

noindex, follow Meta Tags

Add the “noindex, follow” robots meta tag to pages that shouldn’t show up in search results. Search engine bots can still crawl the page and follow its links, but the page itself won’t be included in the index.
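The tag goes in the page’s head section; NOINDEX_FOLLOW_TAG below shows its usual form. The Python sketch reads a page’s robots meta directives with html.parser and reports whether the page is set to noindex while still allowing links to be followed.

The helper names are hypothetical. The sketch also ignores robots directives sent as HTTP headers (X-Robots-Tag) and crawler-specific meta names such as googlebot.

```python
from html.parser import HTMLParser

# The usual form of the tag described above.
NOINDEX_FOLLOW_TAG = '<meta name="robots" content="noindex, follow">'


class RobotsMetaReader(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def robots_directives(html):
    reader = RobotsMetaReader()
    reader.feed(html)
    reader.close()
    return reader.directives


def is_noindex_follow(html):
    d = robots_directives(html)
    # "none" means noindex + nofollow; following links is the default otherwise.
    return "noindex" in d and "nofollow" not in d and "none" not in d


print(is_noindex_follow(f"<head>{NOINDEX_FOLLOW_TAG}</head>"))
```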

Acceptable “Duplicate Content”

Syndicated Content

Business 2 Community is an online community that offers a variety of news related to social media, marketing, branding and other areas. B2C’s content includes articles written for the site as well as posts republished from other blogs.

At the end of articles taken from other sources, B2C links back to the original content:

For this reason, content on this site and similar sites is not seen as harmful duplicate content. The same goes for news sites that cover breaking news and end up with two of the same article on different pages of the site.

Duplicate Content That Will Hurt Your Website

Scraped Content

Scraping means taking content from a reputable site and publishing it as your own without crediting the original author or source. This is an unethical practice, and from a user standpoint, it often results in a poor user experience.

When creating unique content, keep your visitors in mind. Give your visitors and target audience content that is useful and informative; otherwise, there’s no point in providing it.

Manufacturer Product Descriptions

Manufacturer-provided product descriptions are duplicate content by definition: the same descriptions are distributed to many online stores. Your website ends up competing with the manufacturer and with every other site that copies and pastes the same text.

Although it will take a great deal of time and effort, create unique content for each product description. It will be well worth it.

Depending on the size of your website and product inventory, I understand this may not be feasible. In that case, I urge you to consider using the “noindex, follow” meta tag on product pages you don’t write unique content for. This keeps those pages out of the search index, so they aren’t competing with every other copy of the manufacturer’s description.

The Case for Original and Unique Content

When it comes down to it, your best bet is to create original and unique content for your website and blog. While there are some exceptions to the rule of duplicate content, the main goal of content creation is to create usable content for your users. User experience is an important element of a website, and if your website isn’t up to par in the eyes of your visitors, they will go elsewhere for the information they’re seeking.

Remember, there are millions of websites out there; how do you set your website apart from your competition? Start with distinctive and original content. I promise you won’t be disappointed.